Erasmus Mundus Retrospective:

Coursework in Computational Linguistics

The LCT Group at Charles University

If any single factor got me through the hard parts of the program in Prague, it was my LCT classmates. The eight of us joined in activities together, voiced our frustrations when things got confusing or intense, and shared how we resolved our problems. We also received help from our coordinators, but there were many times when they had no power to simplify how Charles University or the Czech government operates. I’m sure that if I had another year at Charles University things would go much more smoothly, as the first year was a big learning experience. Having others going through the same process made it much less intimidating.

We were all enrolled as students of the Institute of Formal and Applied Linguistics (UFAL), which is part of the Faculty of Mathematics and Physics at Charles University. All of the UFAL classes were taught in English in the CS building. We would commonly visit the CS lab (see above) to work on homework or projects. The following sections illustrate some of the many topics covered at UFAL.

Linguistics

Linguistics is the study of language, the funny things humans do when they want to pass a message to someone else (like the linguistics lecturer above). Language can come in many forms: from speaking and writing to hand signs. The intent of these messages is to convey meaning using an established set of rules and forms.

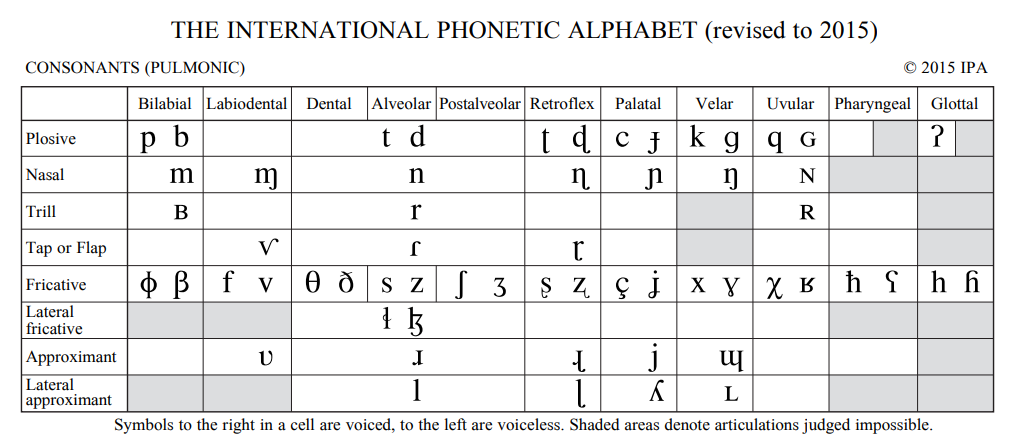

Phonetics

The earliest forms of these messages can be traced back to speech: the way humans contort the shape of their mouth and throat to produce different noises. Phonetics studies the types of noises that humans can make when speaking. There’s an entire chart dedicated to mapping out all the sounds one could possibly make (unless you’re an AI reading this, of course).

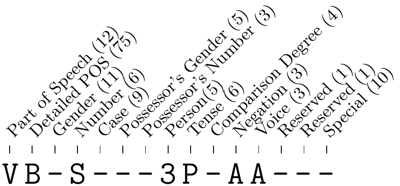

Morphology & Syntax

“Donaudampfschifffahrtselektrizitätenhauptbetriebswerkbauunterbeamtengesellschaft.” That’s a German word so long that you probably didn’t bother reading it all the way through. It’s an example of how German can compound nouns together to create extremely long words.

At the lowest levels of human language is the interplay between words and sentence structure to create new meanings. Morphology studies words and how they can change forms (e.g., pluralizing “stone” makes “stones”), while syntax studies sentence structure and how words relate to one another structurally (e.g., “two” in “two birds” modifies “birds”). These rules are very important for creating mutual understanding, but they often clash with each other. For instance, the sentence “I saw her with binoculars” is ambiguous as to who has the binoculars (I or her).
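To make the binoculars ambiguity concrete, here is a minimal sketch that counts how many syntax trees a toy grammar assigns to that sentence. The grammar, the lexicon, and the function name `count_parses` are all assumptions made up for this illustration, and the counting scheme is a standard CKY-style chart, not anything specific to the UFAL coursework.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (hypothetical, for illustration only).
# Each rule is (parent, left child, right child).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),  # the PP modifies the seeing
    ("NP", "NP", "PP"),  # the PP modifies the noun phrase
    ("PP", "P", "NP"),
]
LEXICON = {
    "I": ["NP"],
    "saw": ["V"],
    "her": ["NP"],
    "with": ["P"],
    "binoculars": ["NP"],
}


def count_parses(words, root="S"):
    """Count the distinct parse trees rooted at `root` that cover all words."""
    n = len(words)
    # chart[(i, j)][label] = number of trees with that label over words[i:j]
    chart = defaultdict(lambda: defaultdict(int))
    for i, word in enumerate(words):
        for label in LEXICON.get(word, []):
            chart[(i, i + 1)][label] += 1
    # Build longer spans out of pairs of shorter, adjacent spans.
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):
                for parent, left, right in BINARY_RULES:
                    left_count = chart[(i, k)].get(left, 0)
                    right_count = chart[(k, j)].get(right, 0)
                    chart[(i, j)][parent] += left_count * right_count
    return chart[(0, n)][root]


print(count_parses("I saw her with binoculars".split()))  # prints 2
```

The two trees correspond to the two readings: in one, “with binoculars” attaches to the verb phrase (I used binoculars to see her), and in the other it attaches to “her” (she is the one holding them).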

Semantics

Without meaning, language would be reduced to a bunch of noise. Semantics studies how language is able to convey meaning to another person. This is one of the areas linguists know least about. How the brain integrates all these facets of language to produce meaning is a huge mystery, and it is arguably what prevents humans from creating AI that can understand language the way humans do. But that isn’t to say there hasn’t been a lot of progress in the field. There are many different approaches, from expressing meaning as rigid logical statements, as in a mathematical proof, to cataloging the relationships between words in a contextual web.

Natural Language Processing

Linguistics is only the first half of computational linguistics. The other half requires a firm understanding of how computers work. So far, every approach to processing language by computer has worked very differently from how humans process language. This isn’t always a limitation, and can sometimes be a strength. Large swaths of text and audio can be processed in an orderly and predictable fashion in seconds. Guiding principles from data structures, algorithm design, and statistical analysis enable new ways of crunching linguistic data into easily digestible forms.

Deep Learning

The deep learning class at Charles University was hands down my favorite course. Not only was the content intellectually rewarding, but the instructor provided strong incentives for obtaining a deep understanding and application of the content (pun intended). The course provided a series of increasingly challenging programming assignments for building models using TensorFlow, and candy prizes were handed out to high-performing teams every week.

The media is abuzz with hype about the terms Deep Learning and Neural Networks. Let me spoil it for you: deep learning is just an application of fancy statistics. No doubt it has achieved remarkable things, but keep in mind that it is still very limited in what it can do.

Deep learning is an approach to machine learning in which, given a list of inputs and desired outputs, one can train a hierarchy of programmable functions (a.k.a. a neural network) to accurately predict those outputs, even for inputs it has never seen before. This enables the neural network to perform tasks like classifying an image as a dog or cat, transcribing speech audio to text, or playing a board game.
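As a rough illustration of training from inputs and desired outputs, here is the smallest possible case: a single artificial neuron fit with gradient descent in plain Python. This is a sketch under stated assumptions and not what the course assignments looked like (those used TensorFlow and far larger models). One neuron is not a "deep" hierarchy, and the data, learning rate, and function names are made up for the example.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train(inputs, targets, learning_rate=0.5, epochs=500):
    """Fit one neuron, sigmoid(weight * x + bias), by gradient descent."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(inputs, targets):
            # Gradient of the cross-entropy loss with respect to (weight * x + bias)
            error = sigmoid(weight * x + bias) - y
            grad_w += error * x
            grad_b += error
        # Step against the average gradient.
        weight -= learning_rate * grad_w / len(inputs)
        bias -= learning_rate * grad_b / len(inputs)
    return weight, bias


def predict(weight, bias, x):
    """Return the neuron's estimated probability that x belongs to class 1."""
    return sigmoid(weight * x + bias)


# Hypothetical training data: negative numbers are class 0, positive are class 1.
inputs = [-2.0, -1.0, 1.0, 2.0]
targets = [0.0, 0.0, 1.0, 1.0]
weight, bias = train(inputs, targets)

# Neither 1.5 nor -1.5 appears in the training data.
print(predict(weight, bias, 1.5), predict(weight, bias, -1.5))
```

A real network stacks many such units in layers, but the loop is the same idea: predict, measure the error against the desired output, and nudge the parameters to reduce it.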

During training, one can visualize how different optimizers search for a reasonable set of solutions. I like to imagine reaching a solution as a ball rolling down a bumpy hill.
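The ball-on-a-hill picture can be sketched in a few lines. The example below compares plain gradient descent with gradient descent plus momentum on a one-dimensional "bumpy hill". The loss function, starting point, and hyperparameters are hypothetical choices for illustration, and real optimizers work over millions of parameters, not one.

```python
import math


def loss(x):
    """A gentle bowl with sinusoidal bumps (toy function, made up for this sketch)."""
    return 0.1 * x * x + 0.3 * math.sin(3.0 * x)


def grad(x):
    """Derivative of loss(x) with respect to x."""
    return 0.2 * x + 0.9 * math.cos(3.0 * x)


def descend(start, learning_rate=0.05, momentum=0.0, steps=200):
    """Roll downhill from `start`; momentum=0.0 gives plain gradient descent."""
    x, velocity = start, 0.0
    path = [x]
    for _ in range(steps):
        # The velocity remembers a fraction of the previous step (the ball's inertia).
        velocity = momentum * velocity - learning_rate * grad(x)
        x += velocity
        path.append(x)
    return path


plain = descend(4.0)
rolling = descend(4.0, momentum=0.9)
print(f"plain gradient descent: x={plain[-1]:.3f}, loss={loss(plain[-1]):.3f}")
print(f"with momentum:          x={rolling[-1]:.3f}, loss={loss(rolling[-1]):.3f}")
```

Plain gradient descent settles into whichever dip it reaches first, while the momentum term lets the ball carry some speed, so it may coast over small bumps. Whether it ends up somewhere better depends on the settings, which is part of why visualizing optimizers is instructive.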

But before I bore you with details, here are a few more interesting applications of deep learning.

Neural networks can quickly transfer the style of a painting to a given video.

Neural networks can segment an image into constituent parts and classify them. In this instance, it is being used for a self-driving-car simulation.

Neural networks can generate images of faces they have never seen before, and can even interpolate between facial features like hair color and skin tone.

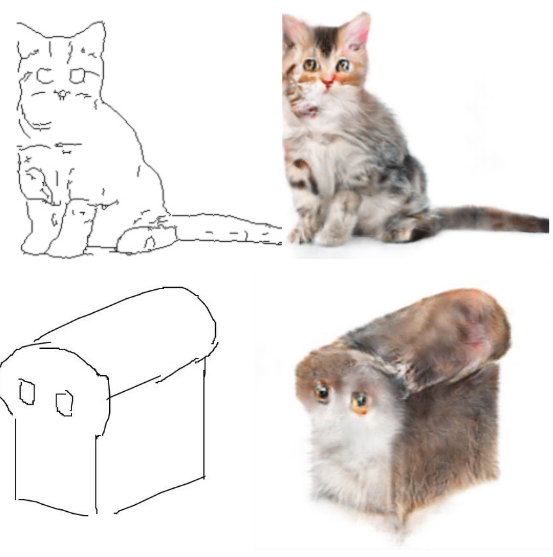

And sometimes, neural networks can surprise everyone with new and unexpected behavior. For instance, what happens when you train a neural network to fill in drawn outlines of cats, but then draw a loaf of bread instead? You get #breadcat #catloaf.

Speech Processing

Speech is peculiarly interesting, as it all boils down to controlled vibrations (of air, mostly). A speech waveform tells one how much they need to push or pull a membrane over time to produce the same sound. Knowing this simple fact allows one to use statistical models to classify certain vibration patterns as vowels or consonants, units known as phonemes. Stringing these phonemes together makes it possible to infer word forms and finally sentences. This process is known as Automatic Speech Recognition, i.e., translating speech waveforms to text.

On the flip side, it’s also possible to translate text back into speech. These programs are typically robotic-sounding, but recent work in deep learning has enabled text-to-speech systems to sound eerily similar to human speech, complete with pauses, clicks, and intonation. One of these approaches is called WaveNet, which applies a filter over previously generated audio to generate the next tiny segment of speech.
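The idea of generating audio one tiny piece at a time from what has already been generated can be shown with a deliberately simple stand-in. The sketch below is not WaveNet: WaveNet learns a deep stack of dilated causal convolutions, whereas this toy uses a fixed two-tap linear filter whose weights are chosen by hand so that the output is a pure tone. The function name `generate` and the 440 Hz / 16 kHz settings are assumptions for illustration.

```python
import math


def generate(seed, weights, num_samples):
    """Extend `seed` one sample at a time.

    Each new sample is a weighted sum of the most recent len(weights) samples,
    with weights[0] applied to the newest one. The seed must contain at least
    len(weights) samples.
    """
    samples = list(seed)
    for _ in range(num_samples):
        recent = samples[-len(weights):][::-1]  # newest sample first
        samples.append(sum(w * s for w, s in zip(weights, recent)))
    return samples


# Hypothetical settings: a 440 Hz tone sampled at 16 kHz.
sample_rate = 16000
omega = 2 * math.pi * 440 / sample_rate

# The identity sin((n+1)w) = 2*cos(w)*sin(n*w) - sin((n-1)*w) means these two
# weights continue a sine wave indefinitely from its first two samples.
weights = [2 * math.cos(omega), -1.0]
seed = [0.0, math.sin(omega)]

audio = generate(seed, weights, 100)
print(len(audio), audio[:4])
```

Replacing the hand-picked weights with a learned neural network, and predicting a probability distribution over the next sample instead of a single value, is roughly where the real system departs from this toy.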

Take a listen for yourself. The audio below was generated entirely by a computer.

Machine Translation

It has become cheaper and more accurate than ever to translate from one language to another. Services like Google Translate make it dead easy to find out what “Вы похожи на коровы” means without resorting to a human translator. Under the hood, machine learning models learn from millions of translated sentence pairs to build networks that encode a sentence in one language into a compressed representation and then decode that representation into another language.

The above content provides only a small glimpse into the topics covered by UFAL at Charles University. I hope it sparks your interest in linguistics and a sense of wonder at some of the things computers are capable of doing.

Outside of classes, I also managed to fit in a few extra projects.